Kimi K2.5 makes a big promise. Barely a few hours after its release, I built a complete working project with it, one that would have cost 8–10x more using Claude Opus 4.5.

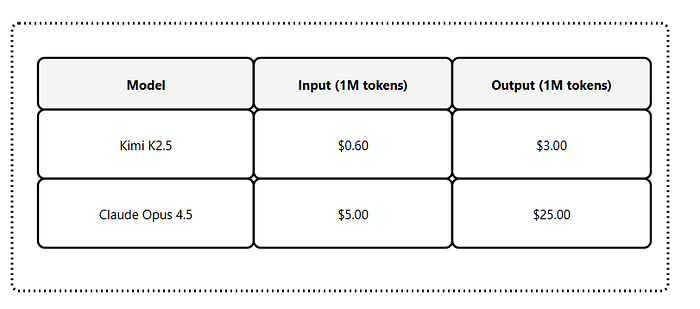

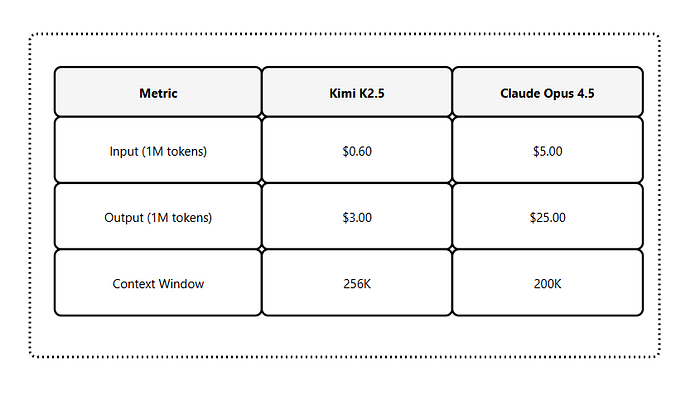

But before you doubt me, here's a simple comparison:

That's roughly 8x cheaper on input and output.

I was reluctant to test it at first. The low number of downloads on Hugging Face made me skeptical.

With so many other AI models and tools to evaluate, I wanted to give it a pass.

But the Agent Swarm capability and the 1 trillion parameter architecture were too exciting to let go.

If you've followed my recent articles, you know I covered Ollama Launch — the new command that eliminates configuration headaches when connecting Claude Code to alternative models.

That workflow made this test possible in minutes.

In this article, I'll walk you through:

- What makes Kimi K2.5 different (Agent Swarm, native multimodal, 256K context)

- How to set it up with Claude Code using Ollama Launch

- Real testing results from building an actual project

- Cost breakdown and whether the savings justify the switch

Let's see if a 1-trillion-parameter model delivers on its promise.

Kimi K2.5

Kimi K2.5 is Moonshot AI's most intelligent release, built through continual pretraining on approximately 15 trillion mixed visual and text tokens.

1 Trillion Parameters

The scale matters for complex reasoning.

More parameters generally give a model more capacity to capture nuanced code patterns, architectural decisions, and multi-step problem-solving, though parameter count alone doesn't guarantee better results.

Native Multimodal Architecture

K2.5 was pre-trained on vision-language tokens from the ground up.

It handles images, videos, and text in a unified way.

You can:

- Generate code from UI designs

- Process video workflows

- Ground tool use in visual inputs

Agent Swarm Capability

K2.5 moves from single-agent scaling to self-directed, coordinated, swarm-like execution.

It decomposes complex tasks into parallel sub-tasks executed by dynamically instantiated, domain-specific agents.

In simple terms, give it a complex project, and it breaks it down across multiple specialized agents working together.
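To make the idea concrete, here is a minimal sketch of the decompose-then-run-in-parallel pattern using Python's `asyncio`. This is not how Kimi K2.5 implements Agent Swarm internally. The `decompose` and `run_subagent` functions are hypothetical stand-ins: a real swarm would plan the sub-tasks itself and each agent would call a model.

```python
import asyncio


async def run_subagent(role: str, subtask: str) -> str:
    """Hypothetical stand-in for one specialized agent."""
    await asyncio.sleep(0.01)  # simulates I/O-bound work such as a model call
    return f"[{role}] finished: {subtask}"


def decompose(project: str) -> dict[str, str]:
    """Hard-coded decomposition for illustration; a real swarm plans this itself."""
    return {
        "frontend": f"build the UI for {project}",
        "backend": f"build the API for {project}",
        "database": f"design the schema for {project}",
    }


async def run_swarm(project: str) -> list[str]:
    subtasks = decompose(project)
    # gather() runs all sub-agents concurrently and returns results in input order.
    return await asyncio.gather(
        *(run_subagent(role, task) for role, task in subtasks.items())
    )


if __name__ == "__main__":
    for line in asyncio.run(run_swarm("a task dashboard")):
        print(line)
```

The point of the sketch is only the shape: one planner, several workers running at the same time, and a single place where the results come back together.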

256K Context Window

All K2.5 variants support a 256K context window.

That's enough to keep many small and mid-sized codebases in context during a session.
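If you want a rough idea of whether your own project fits, a quick estimate helps. The sketch below assumes about 4 characters per token, which is a common rule of thumb and not Kimi's actual tokenizer, and it reads "256K" as 256,000 tokens. The file suffixes, skipped directories, and the `reserve` parameter are my own illustrative choices.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4          # rough heuristic, NOT the model's real tokenizer
CONTEXT_WINDOW = 256_000     # "256K" read as 256,000 tokens (assumption)
SUFFIXES = {".py", ".js", ".jsx", ".ts", ".tsx", ".css", ".html", ".json"}
SKIP_DIRS = {"node_modules", ".git", "dist", "build"}


def estimate_tokens(root: str) -> int:
    """Approximate token count of the source files under `root`."""
    root_path = Path(root)
    total_chars = 0
    for path in root_path.rglob("*"):
        # Ignore dependency and build directories anywhere below the root.
        if SKIP_DIRS.intersection(path.relative_to(root_path).parts):
            continue
        if path.is_file() and path.suffix in SUFFIXES:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root: str, reserve: int = 32_000) -> bool:
    """Keep `reserve` tokens free for the conversation and the model's replies."""
    return estimate_tokens(root) <= CONTEXT_WINDOW - reserve
```

Calling `fits_in_context(".")` from a project root gives a yes/no answer. Treat it as a ballpark only, since real token counts vary by language and tokenizer.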

Thinking Modes

K2.5 supports both instant and thinking modes:

- Instant mode: Fast responses for straightforward tasks

- Thinking mode: Multi-step reasoning for complex problems

You toggle this based on your needs.

Cost

Here's the pricing breakdown:
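To turn per-token prices into a per-session figure, a small calculator like the one below can help. The prices in it are placeholders I picked to keep the arithmetic easy to follow. They are not Moonshot's or Anthropic's published rates, so substitute the current numbers from each provider's pricing page. The token counts are also made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_mtok: float   # USD per 1M input tokens
    output_per_mtok: float  # USD per 1M output tokens


def session_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Total USD cost for one session at the given per-million-token prices."""
    return (
        input_tokens * p.input_per_mtok + output_tokens * p.output_per_mtok
    ) / 1_000_000


# PLACEHOLDER prices, not real rates: replace with the providers' current numbers.
cheaper_model = Pricing(input_per_mtok=1.0, output_per_mtok=4.0)
pricier_model = Pricing(input_per_mtok=8.0, output_per_mtok=32.0)

# Hypothetical usage for one coding session.
usage = {"input_tokens": 2_000_000, "output_tokens": 500_000}

cheap = session_cost(cheaper_model, **usage)
pricey = session_cost(pricier_model, **usage)
print(f"cheaper: ${cheap:.2f}  pricier: ${pricey:.2f}  ratio: {pricey / cheap:.1f}x")
```

With these placeholder numbers the ratio comes out at 8x because both prices differ by the same factor. When input and output prices differ by different factors, the ratio depends on your own mix of input and output tokens.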

Now let's set it up with Claude Code.

Setting Up Kimi K2.5 with Ollama Launch

If you followed my previous Ollama Launch article, this will feel familiar.

The setup is identical; only the model changes.

Prerequisites

Make sure you have:

- Ollama v0.15.2 or later installed

- Claude Code installed

- An Ollama Cloud account (for cloud models)

Check your Ollama version:

ollama --version

You need version 0.15.2 or later. If you're behind, update from ollama.com/download.
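If you'd rather script that check, here is a minimal sketch. It assumes the output of `ollama --version` contains a dotted `x.y.z` version somewhere in the text. The helper names are my own, and the minimum is simply the 0.15.2 mentioned above.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

MINIMUM = (0, 15, 2)  # minimum version named in this article


def parse_version(text: str) -> Optional[Tuple[int, ...]]:
    """Pull the first x.y.z version out of arbitrary text, or None if absent."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    return tuple(int(part) for part in match.groups()) if match else None


def meets_minimum(text: str, minimum: Tuple[int, ...] = MINIMUM) -> bool:
    version = parse_version(text)
    # Tuples compare element by element, so (0, 16, 0) >= (0, 15, 2) is True.
    return version is not None and version >= minimum


def installed_ollama_version() -> Optional[str]:
    """Return the raw `ollama --version` output, or None if ollama isn't on PATH."""
    if shutil.which("ollama") is None:
        return None
    result = subprocess.run(["ollama", "--version"], capture_output=True, text=True)
    return result.stdout + result.stderr
```

Comparing integer tuples instead of strings avoids the classic trap where "0.9.0" sorts after "0.15.2" alphabetically.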

Step 1: Pull the Kimi K2.5 Cloud Model

Kimi K2.5 is available as a cloud model on Ollama. Run:

ollama pull kimi-k2.5:cloud

Because inference happens remotely, cloud models are lightweight and the download is quick.

Step 2: Launch with Claude Code

Here's where Ollama Launch shines. One command:

ollama launch claude --model kimi-k2.5:cloud

Ollama Launch handles everything:

- Sets ANTHROPIC_AUTH_TOKEN

- Configures ANTHROPIC_BASE_URL to localhost:11434

- Launches Claude Code with the selected model
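For a sense of what that automation amounts to, here is a rough manual equivalent: build an environment with the two variables and start Claude Code with the chosen model. This is a sketch and not Ollama Launch's actual code. The token value `"ollama"` is an assumed placeholder, and `build_claude_launch` is a name I made up.

```python
import os
from typing import Dict, List, Optional, Tuple

OLLAMA_BASE_URL = "http://localhost:11434"


def build_claude_launch(
    model: str, base_env: Optional[Dict[str, str]] = None
) -> Tuple[List[str], Dict[str, str]]:
    """Return the command and environment for pointing Claude Code at Ollama."""
    # Copy the environment so the caller's (or the process's) env is untouched.
    env = dict(os.environ if base_env is None else base_env)
    env["ANTHROPIC_BASE_URL"] = OLLAMA_BASE_URL
    env["ANTHROPIC_AUTH_TOKEN"] = "ollama"  # assumed placeholder value
    command = ["claude", "--model", model]
    return command, env


if __name__ == "__main__":
    command, env = build_claude_launch("kimi-k2.5:cloud")
    print(" ".join(command))
    print(env["ANTHROPIC_BASE_URL"])
```

To actually start the session you could pass both values to `subprocess.run(command, env=env)`. In practice the single `ollama launch` command is less to remember.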

Step 3: Verify the Setup

Once Claude Code starts, run /status to confirm:

Model: kimi-k2.5:cloud

Auth token: ANTHROPIC_AUTH_TOKEN

Anthropic base URL: http://localhost:11434

If you see kimi-k2.5:cloud as the model, you're ready.
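You can also check from outside Claude Code that the local Ollama server is up and knows about the model. The sketch below queries Ollama's `/api/tags` endpoint, which lists available models. I'm assuming a pulled cloud model shows up in that list under the same name, and the helper names are my own.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional


def model_names(tags_payload: dict) -> List[str]:
    """Extract model names from an /api/tags response body."""
    return [entry.get("name", "") for entry in tags_payload.get("models", [])]


def has_model(tags_payload: dict, wanted: str) -> bool:
    return wanted in model_names(tags_payload)


def fetch_tags(
    base_url: str = "http://localhost:11434", timeout: float = 3.0
) -> Optional[dict]:
    """Return the parsed /api/tags response, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        return None


if __name__ == "__main__":
    payload = fetch_tags()
    if payload is None:
        print("Ollama server not reachable on localhost:11434")
    else:
        print("kimi-k2.5:cloud available:", has_model(payload, "kimi-k2.5:cloud"))
```

If the server isn't reachable, start Ollama first and rerun the check before digging into Claude Code itself.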

Context Length Note

Cloud models run at full context length (256K for Kimi K2.5).

If you were using a local model, you'd need to adjust context length in Ollama settings.

With cloud, skip this step.

Testing Kimi K2.5 + Claude Code

With the setup complete, I wanted to test something that would exercise the model's capabilities.

A simple Fibonacci function, like the one we tested in the Ollama Launch article, would not be enough.

I needed a project that would showcase:

- Multi-file generation

- Code architecture decisions

- Real-world complexity

Test Project: Full-Stack Task Dashboard

I gave Kimi K2.5 this prompt:

Build a task management dashboard with:

- React frontend with Tailwind CSS

- Express backend with REST API

- SQLite database for persistence

- CRUD operations for tasks

- Task categories and priority levels

- Filter and search functionality

- Clean, modern UI
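To show what the persistence part of that prompt involves, here is a small sketch of a task table with categories, priorities, and filter/search, using Python's built-in `sqlite3`. This is my own illustration, not the code Kimi K2.5 generated (that project used Express). The schema, column names, and function names are all hypothetical.

```python
import sqlite3

# Hypothetical schema: one table covering categories, priorities, and completion.
SCHEMA = """
CREATE TABLE IF NOT EXISTS tasks (
    id       INTEGER PRIMARY KEY AUTOINCREMENT,
    title    TEXT NOT NULL,
    category TEXT NOT NULL DEFAULT 'general',
    priority TEXT NOT NULL DEFAULT 'medium'
             CHECK (priority IN ('low', 'medium', 'high')),
    done     INTEGER NOT NULL DEFAULT 0
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # lets us read columns by name
    conn.executescript(SCHEMA)
    return conn


def add_task(conn, title, category="general", priority="medium") -> int:
    cur = conn.execute(
        "INSERT INTO tasks (title, category, priority) VALUES (?, ?, ?)",
        (title, category, priority),
    )
    conn.commit()
    return cur.lastrowid


def find_tasks(conn, search="", category=None, priority=None):
    """Filter by optional category/priority and a substring match on the title."""
    sql = "SELECT * FROM tasks WHERE title LIKE ?"
    params = [f"%{search}%"]
    if category is not None:
        sql += " AND category = ?"
        params.append(category)
    if priority is not None:
        sql += " AND priority = ?"
        params.append(priority)
    return conn.execute(sql + " ORDER BY id", params).fetchall()
```

Parameterized queries (the `?` placeholders) keep user-supplied search text out of the SQL string, which is the main thing I look for when reviewing generated database code.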

First Impressions

The model started generating immediately.

It created a logical file structure, then built each component systematically.

The response included:

- /server directory with Express routes and database setup

- /client directory with React components

- Proper separation of concerns

- Environment configuration file

Speed

The entire project structure (frontend, backend, database schema, configuration files) was generated in under 4 minutes: 3 minutes, 18 seconds, to be precise.

For context, similar tests with models other than Claude Opus 4.5 took more than 4 minutes for comparable output.

Agent Swarm

I didn't see "Agent Swarm" anywhere. But the behavior suggested parallel processing.

When I asked for modifications — add user authentication, change the color scheme, add dark mode — the model handled multiple changes simultaneously.

It felt faster than single-threaded responses.

Final Thoughts

Kimi K2.5 with Ollama Launch is the easiest alternative model setup I've tested.

The 1-trillion-parameter model delivers solid code generation at a fraction of the cost. The Agent Swarm capability shows promise for complex, multi-part tasks.

This is especially useful when you run out of Claude Code tokens, as I did on one of my accounts.

Here's what I'm doing for my test projects:

- Kimi K2.5 for scaffolding, boilerplate, and standard patterns

- Claude Sonnet for day-to-day development and debugging

- Claude Opus for complex architectural decisions and tricky problems
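If you want to make that split explicit in your own tooling, a simple lookup is enough. The task categories below are my own shorthand for the three bullets above, and the Claude entries are plain labels, not exact model identifiers.

```python
# Hypothetical task-type-to-model routing table based on the split above.
ROUTES = {
    "scaffolding": "kimi-k2.5:cloud",
    "boilerplate": "kimi-k2.5:cloud",
    "standard-pattern": "kimi-k2.5:cloud",
    "development": "claude-sonnet",   # label only, not an exact model ID
    "debugging": "claude-sonnet",
    "architecture": "claude-opus",    # label only, not an exact model ID
    "tricky-problem": "claude-opus",
}


def pick_model(task_type: str, default: str = "claude-sonnet") -> str:
    """Return the model for a task type, falling back to the day-to-day model."""
    return ROUTES.get(task_type.strip().lower(), default)


if __name__ == "__main__":
    for task in ("scaffolding", "debugging", "architecture", "something-else"):
        print(f"{task}: {pick_model(task)}")
```

Unknown task types fall back to the day-to-day model, which matches how I'd handle anything that doesn't clearly fit the cheap or the expensive end.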

Have you tested Kimi K2.5? Leave a comment below with your experience — I'd love to hear what projects you're building with it.

Resources:

- Ollama: ollama.com

- Kimi K2.5 on Ollama: ollama.com/library/kimi-k2.5

- Moonshot AI Platform: platform.moonshot.ai


Let's Connect!

If you are new to my content, my name is Joe Njenga.

Join thousands of other software engineers, AI engineers, and solopreneurs who read my content daily on Medium and on YouTube where I review the latest AI engineering tools and trends. If you are more curious about my projects and want to receive detailed guides and tutorials, join thousands of other AI enthusiasts in my weekly AI Software engineer newsletter
